Abstract

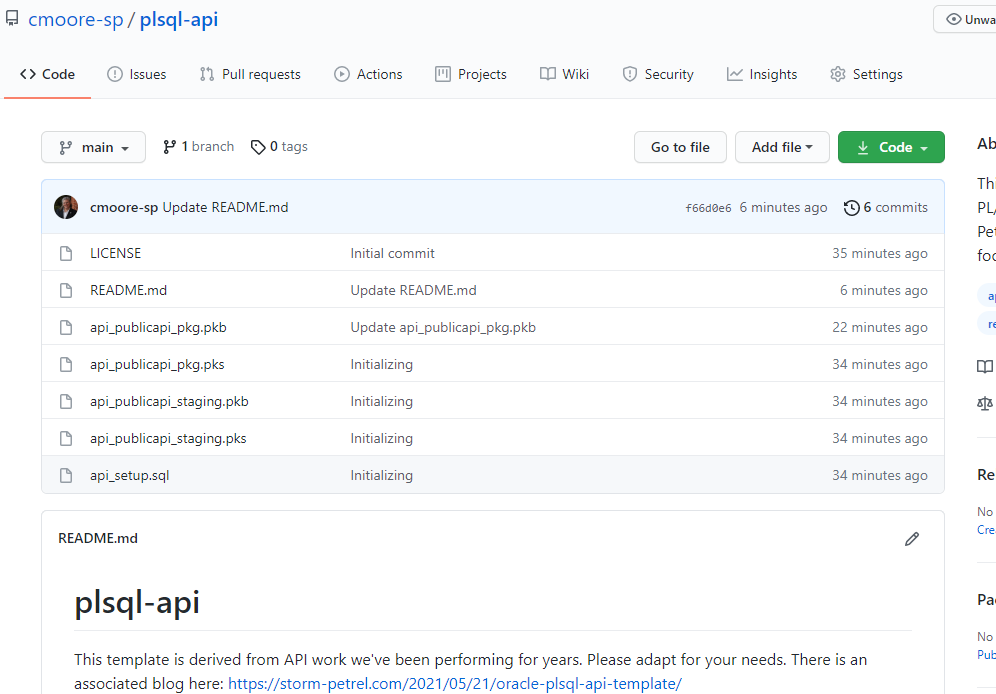

A key purpose of this blog entry is to communicate that we are publishing a template for PL/SQL-based API. It is located on my GITHub page (https://github.com/cmoore-sp/plsql-api.git) and communicates with a public website hosting public APIs.

Introduction

My first interesting exploration with writing API with Oracle APEX and PL/SQL involved exchanging data and documents between our database and AWS S3. It has been four years since I published that effort. In the ensuing years, our team continued to write API. We have benefited and appreciated the progressive improvements that Oracle made with writing these digital connectors. Release by release, year by year, Oracle smooths the process of connecting via HTTP and managing JSON data. In APEX 20.1, the team introduces us to APEX based wizard to exchange data via HTTP links.

A key purpose of this blog entry is to communicate that we are publishing a template for PL/SQL-based API. It is located on my GITHub page (https://github.com/cmoore-sp/plsql-api.git) and communicates with a public website hosting public APIs. I initially worked through the US Weather Service’s site. But when I hit it from Oracle, it denied me for the lack of authentication whereas I easily got data from Postman. C’est la vie. We’ve been using and refining this template for about 18 months, maybe longer.

Rarely is our goal writing code line by line. I reviewed our own work related to a data exchange link to the U.S. government’s FEMA data site. The version I wrote with the best tools at hand required 545 lines to parse JSON data. In 2021, that same effort took 124 lines. A quarter of the code to achieve the same results. The code is simpler, shorter, and easier to read. That all boils down to fewer errors and reduced development costs.

This is the first of a series blog posts related to writing application programming interfaces with Oracle PL/SQL. As I write this, I celebrate the APEX based efforts, which reduces code even further.

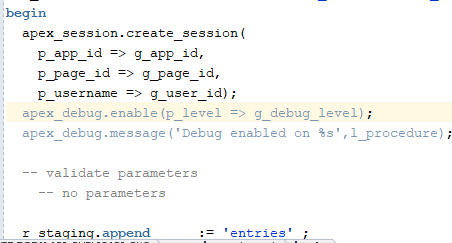

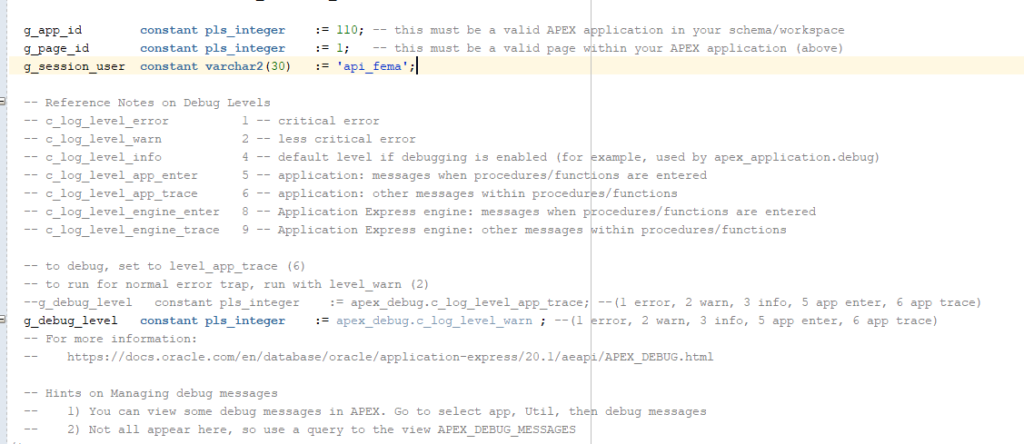

This blog is part of a series. The first dealt with error trapping and debug logging. That can be found here: Oracle APEX Standardize Error & Debugging

Design and Build an API with Oracle

Early Days

Step 1 research and explore. And do your early explorations with tools such as cURL or Postman or some tool other than Oracle. Sure, Oracle is the final destination so the data can mix happily with your tables. Tools like Postman provide better debugging and visibility into the feedback from the API host.

Frankly, the error messaging from Oracle is a bit too blunt – non-specific and incomplete. Postman-like tools provide granular assessments including status (200-OK), time, size. Postman provide a cURL version of the effort and the full suite of headers is visible. It is a Leatherman or Swiss Army knife of utilities.

Furthermore, given many of us use Oracle from a cloud-based site, launching API tests from there (AWS or Oracle Cloud or whereever) introduces several other layers of variables and potential issues such as:

- Oracle-based Access Control Lists (ACL)

- Oracle’s management of HTTPS / SSL certificates

- Routing issues within the cloud environment

- Cloud-based Access Control Lists

- Cloud-based Firewalls and Web Application Firewalls

For example, our WAF denies stuff that looks like SQL – thinking it may be seeing an SQL injection attack. Scraping the Oracle’s 1980’s bias and the cloud-edge from early work saves those problems for later. Maybe it is all perfect, but in the early hours limit your variable and source of trouble.

While on Step 1 “Research and Explore”, read those API instructions carefully.

At Storm Petrel, we look for the ‘Long Pole in the Tent’ or that deep dark hole that will trap the effort. Somewhere in the API documentation and in your effort there is easy work and tough work. Something will hold the entire effort up – that dreaded long pole.

I tend to be suspicious of the authentication process. We tackle that first. We tried for months to nail down an API with PayPal and got utterly frustrated, so frustrated with shopped for another vendor. When we did, we researched the API documentation and access as an integral part of the purchase decision.

The cost of building and supporting an API ought to be part of the long-term analysis of selecting a remote partner. Many vendors provide a sandbox or developer’s access to the API. We pursue that before plonking down a credit card on recuring costs – well we did after the PayPal mess.

After years of writing and supporting API with Oracle PL/SQL and APEX, we state to ourselves – if it can be done with Postman it can be done with Oracle.

API Workflow and Table Architecture

With few exceptions, like my partner’s application to check the weather outside is window and the pool temperature, data from the outside ought to be buffered and isolated as it transitions into your curated databases. It is foreign data why trust it explicitly?

Our workflow and architecture goes as follows (when getting data from a remote site)

- Execute the HTTP GET

- Store the entire GET syntax in a table called API_STAGING

- API Name,

- Module name,

- Base URL

- Appended bit of the URL

- HTTP status code

- JSON response

- HTTP response

- Ok to Delete (a date field)

- A HTTP failure also stores the API staging data.

- This packages manages the API, and looping needed to fetch a large number of data rows, and error trapping.

- Store the entire GET syntax in a table called API_STAGING

- Parse the JSON data

- Using a loop, spin through the API_Staging data

- Query the new JSON data

- Merge data to intermediate Oracle table

- We create a table based on the JSON data

- We name fields as close as possible to the JSON names

- We add a few fields to dates of updates and add – basic management

- Mark rows in the API_Staging table that they can be deleted after the JSON data has been successfully placed into Oracle tables as needed

- Merge with the real data inside the application

- We can validate primary keys and foreign keys

- Confirm integrity of the data

- Isolate and report on any anomalous data

When updating remote data with a POST or PUT, the process goes in reverse. We progressively convert the data and log each action. When ready, we put the out-bound data (a blob or clob) in the API staging table.

Tables

- API Staging – tracks the API activity, behaves like a log for API calls and responses

- Intermediate Tables – tables that mimic the JSON data structures but using classic relational database tools. We mimic JSON field names where possible.

- Production Tables – These tables become the destination for the API data (or the source if sending out with a RESTful POST or PUT)

Data Merge

Historically, I have been comparing a few key elements of data to test if a change exists. Some API, such as FEMA, includes their last update date and a hash value. I can easily compare these. After examining work done by the Oracle APEX development team, I noticed that they are doing an MD hash of an entire row of data. I lifted their procedure into some tests recently.

They converted dates/timestamps to varchar2. They also converted numbers to varchar2. The first time I used their process, I got snagged by null values in fields. I updated the procedure to include an NVL. Now, new data gets inserted. Data that appears the same, I run through hash it, then compare old and new.

function api_publicAPI_MD5 (

P_ROW in api_publicAPI%rowtype

) return varchar2

as

l_procedure varchar2(100) := 'api_publicapi_staging.api_publicAPI_MD5';

begin

-- please convert all values to VARCHAR with consistent formats and manage nulls

-- such as to_char("P_ACTION_DATE",'yyyymmddhh24:mi:ss'), etc

return apex_util.get_hash(

apex_t_varchar2(

nvl(P_ROW.api_name,'^')

,nvl(P_ROW.description,'^')

,nvl(P_ROW.auth,'^')

,nvl(P_ROW.https,'^')

,nvl(P_ROW.cors,'^')

,nvl(P_ROW.link,'^')

,nvl(P_ROW.category,'^')

));

end api_publicAPI_MD5;

Template for API

As mentioned above, I am publishing an API template written in PL/SQL on my Github site. I endeavored to find an API site that did not require registration or authentication so that this package could run easily. API sites that are fully open run the risk of denial-of-service attacks. I respect the need for authentication. I did not include much guidance on authentication. We’ve rarely encountered the same rules twice. We plod through that effort after registering an account. Sorry.

The files include:

1. API_SETUP (procedure)

This procedure establishes a couple of tables

- API_STAGING for the logging of API data in a native format

- API_PUBLICAPI mimics the Public API data structure. Sorry for the awkward name. Our standard involves starting with “API_” then the name of the destination. In this case the destination is “PublicAPI”

2. API_PUBLICAPI_PKG

Again sorry about the stupid name. Our standard is API_Destination_PKG so when the damn thing is called PublicAPI, it looks odd. This package does the HTTP GET and stores the resultant data in the API_STAGING table. The package includes standard code for all normal aspects of a RESTful API. It also references a proxy. In some cases, we use an Apache server to proxy our calls. This permits us to bypass the misery of the Oracle certificate management stuff.

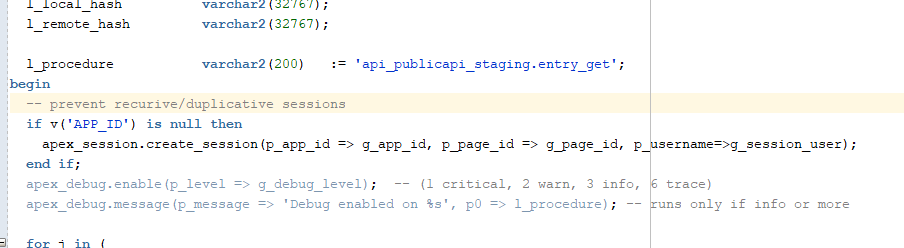

3. API_PUBLICAPI_STAGING

This package page loops through the API_STAGING table to parse the JSON data into an Oracle table. It has a few tricks for querying JSON data.

Close

I will cover detailed elements in upcoming blogs. We’ve have learned and struggled with JSON queries. Some of the features are not well documented.

Have fun, be safe.

Christina Moore (@cmoore_sp)